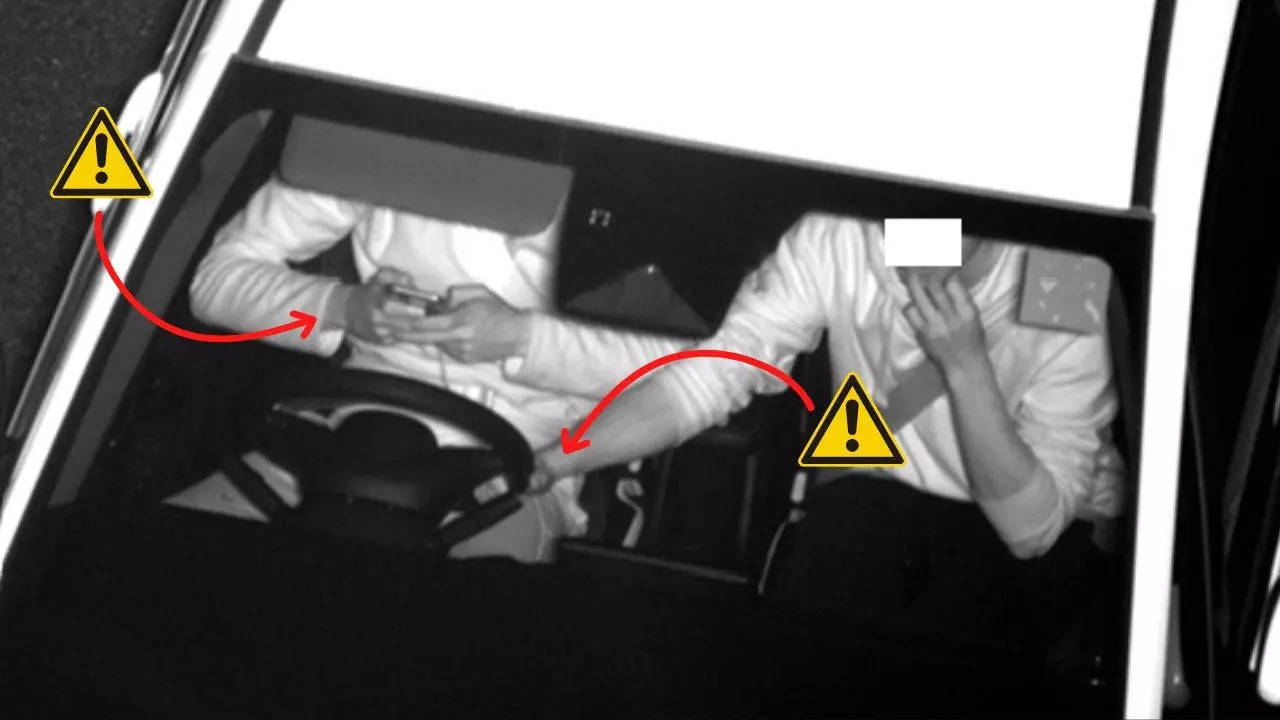

Police in England installed an AI camera system along a major road. It caught almost 300 drivers in its first 3 days.::An AI camera system installed along a major road in England caught 300 offenses in its first 3 days. There were 180 seat belt offenses and 117 mobile phone

The article didn’t really clarify that part, so it’s impossible to tell. My guess is, they tested the system by intentionally driving under it with a phone in hand 100 times. If the camera caught 95 of those, that’s how you would get the 95% catch rate. That setup gives you a priori information about the true state of the driver, but testing takes a while.

However, that’s not the only way to test a system like this. They could have tested it with normal drivers instead. To borrow a medical term, you could say that this is an “in vivo” test. If they did that, there was no a priori information about the true state of each driver. They could still report a different 95% value though. What if 95% of the positives were human verified to be true positives and the remaining 5% were false positives? In a setup like that we have no information about true or false negatives, so this kind of test setup has some limitations. I guess you could count the number of cars labeled negative, but we just can’t know how many of them were true negatives unless you get a bunch of humans to review an inordinate amount of footage. Even then you still wouldn’t know for sure, because humans make mistakes too.
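To make the difference between those two 95% figures concrete, here is a minimal Python sketch. The function names are my own, and the field-test numbers (285 confirmed out of 300 flagged) are made up for illustration; only the 95 out of 100 comes from the staged-test guess above.

```python
def catch_rate(caught: int, staged_offenses: int) -> float:
    """Staged test: every pass is a known offense, so this is sensitivity (recall)."""
    return caught / staged_offenses


def confirmed_rate(confirmed: int, flagged: int) -> float:
    """Field test: share of AI flags a human reviewer confirmed, i.e. precision (PPV)."""
    return confirmed / flagged


# Staged test from the guess above: 95 of 100 deliberate passes caught.
print(catch_rate(95, 100))

# Field test with hypothetical numbers: 285 of 300 flags confirmed by a reviewer.
print(confirmed_rate(285, 300))
```

Both calls give 0.95, but they answer different questions: the first is "how many offenders does it catch", the second is "how many of its flags are real".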

In practical terms, it would still be a really good test, because you can easily have thousands of people drive under the camera within a very short period of time. You don’t know anything about the negatives, but do you really need to? This isn’t a diagnostic test where you need to calculate sensitivity, specificity, positive predictive value and negative predictive value. I mean, it would be really nice if you could, but do you really have to?
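For anyone who wants to see which of those four numbers actually depend on the negatives, here is a rough Python sketch. The function name and all counts are hypothetical; the formulas are the standard confusion-matrix definitions.

```python
from typing import Optional


def _ratio(num: int, den: int) -> Optional[float]:
    """Return num / den, or None when the denominator is zero."""
    return num / den if den else None


def diagnostic_metrics(tp: int, fp: int,
                       fn: Optional[int] = None,
                       tn: Optional[int] = None) -> dict:
    """Return the four metrics; any metric that needs an unknown count is None."""
    have_fn = fn is not None
    have_tn = tn is not None
    return {
        "sensitivity": _ratio(tp, tp + fn) if have_fn else None,
        "specificity": _ratio(tn, tn + fp) if have_tn else None,
        "ppv": _ratio(tp, tp + fp),
        "npv": _ratio(tn, tn + fn) if (have_fn and have_tn) else None,
    }


# Field test (hypothetical counts): only the flagged cars were human reviewed,
# so false negatives and true negatives are unknown.
field = diagnostic_metrics(tp=285, fp=15)
print(field)

# Fully labeled test (hypothetical counts): all four metrics are available.
full = diagnostic_metrics(tp=95, fp=5, fn=5, tn=895)
print(full)
```

With only the reviewed positives, positive predictive value is the one metric you can compute; the other three need some count of the negatives.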

Just to clarify the result: the article states that AI and human review leads to 95%.

Could also be that the human is flagging actual positives, found by the AI, as false positives.

You wouldn’t need people to actually drive past the camera. You could just do that in software testing while the AI was still in development, without needing the physical hardware.

You could just get CCTV footage from traffic cameras and feed that into the AI system. Then you could have humans go through it independently of the AI and tag any incident in which they saw an infraction. If the AI system gets 95% of the human-spotted infractions, then the system is 95% accurate. Of course this ignores the possibility that both the human and the AI miss something, but that would be impossible to account for.

That’s the sensible way to do it in early stages of development. Once you’re reasonably happy with the trained model, you need to test the entire system to see if all the parts actually work together. At that point, it could be sensible to run the two types of experiments I outlined. Different tests for different stages.